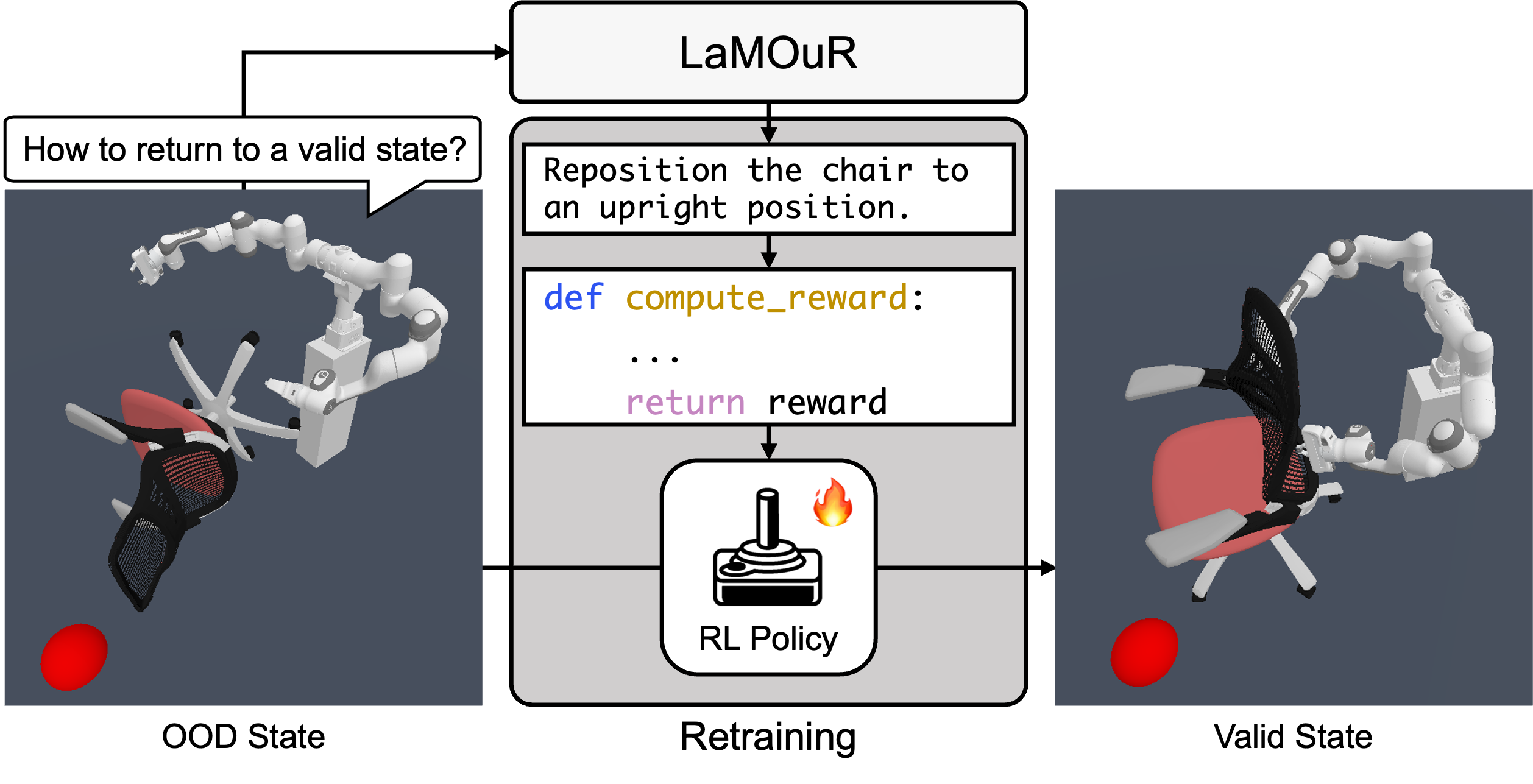

Concept of LaMOuR

LaMOuR generates a dense reward code that guides the agent's recovery from an OOD state. Retraining the policy with this reward enables the agent to return to a state where it can successfully perform its original task.

Deep Reinforcement Learning (DRL) has demonstrated strong performance in robotic control but remains susceptible to out-of-distribution (OOD) states, often resulting in unreliable actions and task failure. While previous methods have focused on minimizing or preventing OOD occurrences, they largely neglect recovery once an agent encounters such states. Although the latest research has attempted to address this by guiding agents back to in-distribution states, their reliance on uncertainty estimation hinders scalability in complex environments. To overcome this limitation, we introduce Language Models for Out-of-Distribution Recovery (LaMOuR), which enables recovery learning without relying on uncertainty estimation. LaMOuR generates dense reward codes that guide the agent back to a state where it can successfully perform its original task, leveraging the capabilities of LVLMs in image description, logical reasoning, and code generation. Experimental results show that LaMOuR substantially enhances recovery efficiency across diverse locomotion tasks and even generalizes effectively to complex environments, including humanoid locomotion and mobile manipulation, where existing methods struggle.

LaMOuR generates a dense reward code that guides the agent's recovery from an OOD state. Retraining the policy with this reward enables the agent to return to a state where it can successfully perform its original task.

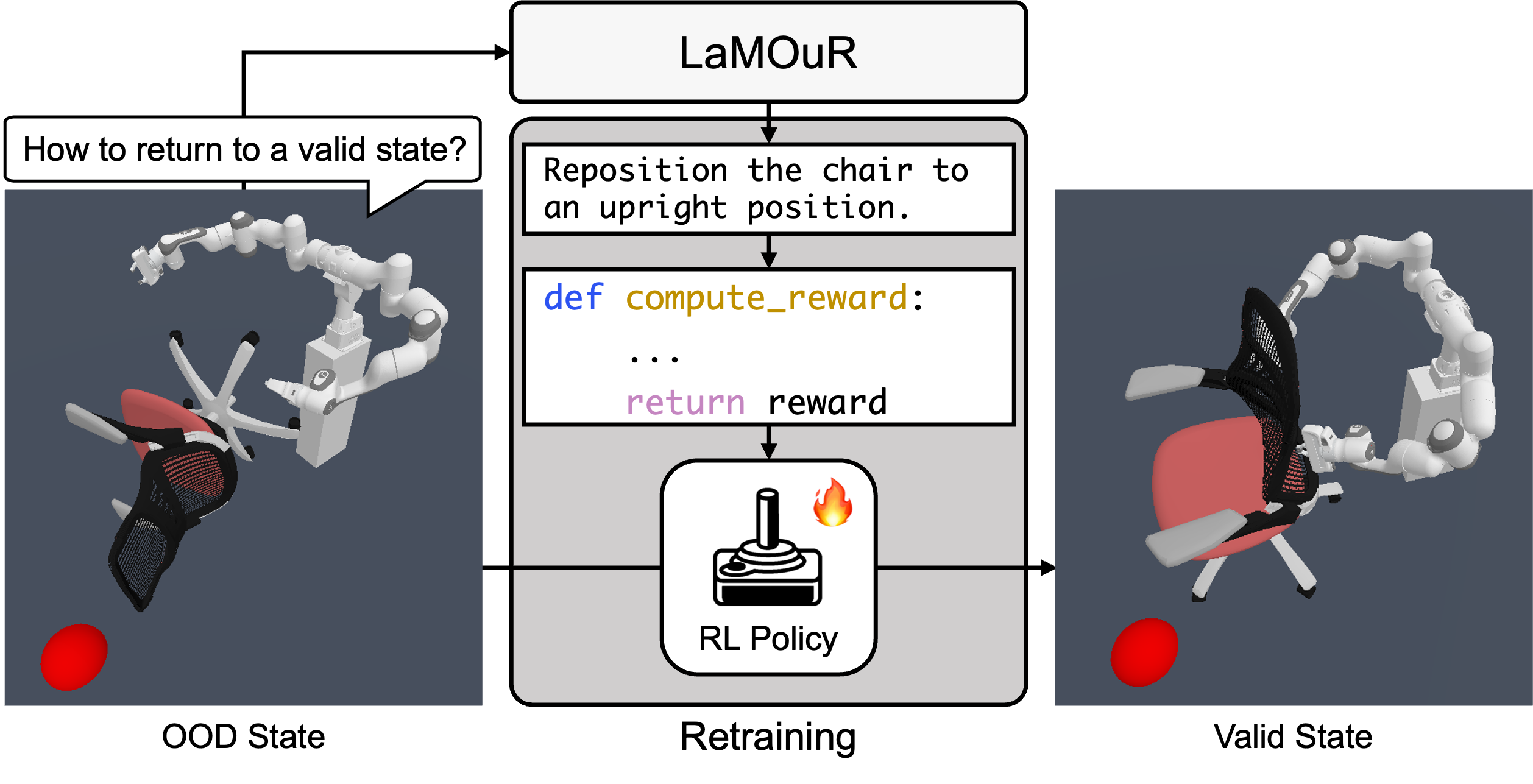

Overview of recovery reward generation in LaMOuR: First, the LVLM generates a description of the OOD state based on the given OOD state image. Next, it infers the recovery behavior needed for the agent to return to a valid state. Finally, the LVLM generates reward and evaluation codes that align with the inferred recovery behavior.

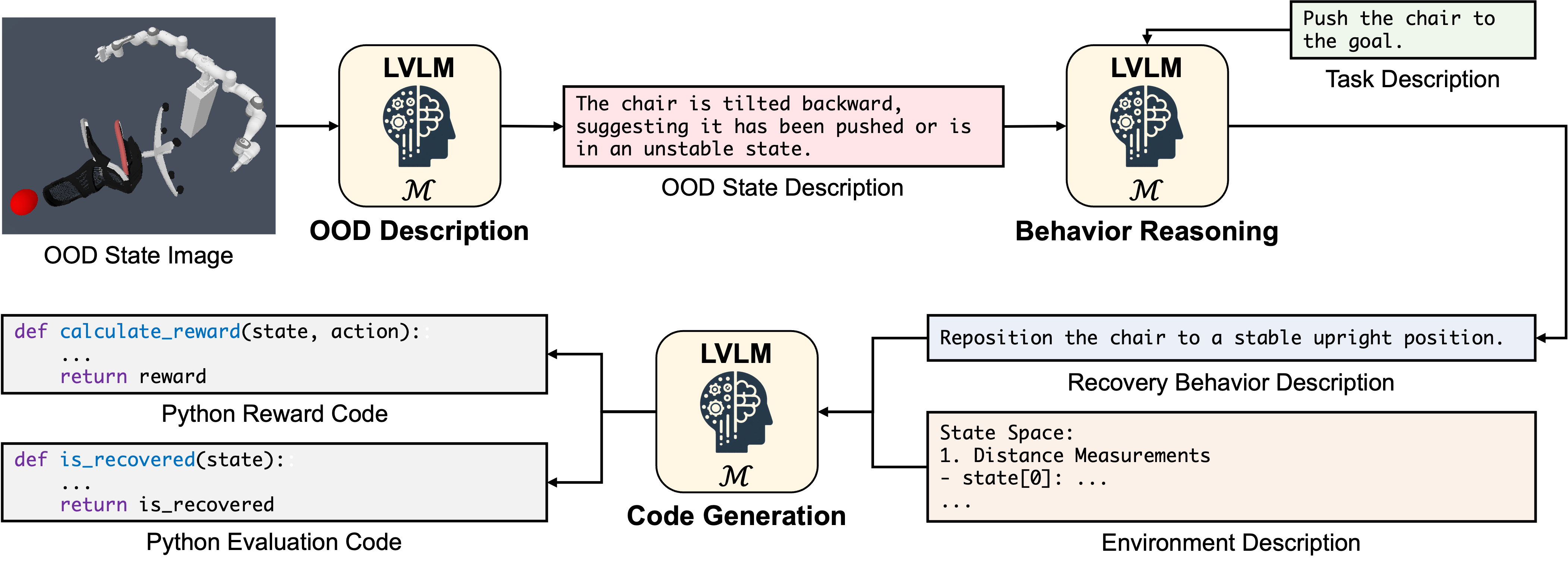

Both our method and the baselines are retrained in OOD states, which the original policy never encounters during its training.

Learning curves for the two complex environments during retraining, evaluated over five episodes every 5000 training steps.

Both our method and the baselines are retrained in OOD states, which the original policy never encounters during its training.

Learning curves for the four MuJoCo environments during retraining, evaluated over five episodes every 5000 training steps. Darker lines and shaded areas denote the mean returns and standard deviations across five random seeds, respectively. The black dashed line represents the average return of the original policy on the original task from a valid state. It is calculated as the average return of the original policy over 100 episodes.

OOD Description Prompt | Behavior Reasoning Prompt | Code Generation Prompt | Few-Shot Example

Ant | HalfCheetah | Hopper | Walker2D | Humanoid | PushChair